
Biography

Paul Manstetten was born in Berlin, Germany. He studied Mechatronics at the University of Applied Sciences Regensburg (Dipl.-Ing.) and Computational Engineering at the University of Erlangen-Nuremberg (MSc). After three years as an application engineer for optical simulations at OSRAM Opto Semiconductors in Regensburg, he joined the Institute for Microelectronics in 2015 as a project assistant. He completed his PhD studies (Dr.techn.) in 2018 and was employed as a postdoctoral researcher working on high-performance computing methods for semiconductor process simulations until 2022. Since 2019, his activities have included teaching assignments focusing on C/C++ and numerical methods for scientific computing. Currently, his main focus is supporting the restructuring and expansion of the bachelor's program Electrical Engineering and Information Technology with respect to the programming lectures led by the Institute for Microelectronics.


Automatic Differentiation in Scientific Computing

Many workflows in scientific computing involve optimization, which often requires differentiating a scalar function with respect to one, several, or all of its inputs. A typical interface to a generic minimization routine requires a handle to the objective function and additionally provides options for how derivative information is obtained. A straightforward approach is to approximate first- or second-order derivatives with a finite difference scheme if the selected optimization algorithm requires them. Finite difference approximations, however, require the step width to be controlled throughout the entire optimization process: a step that is too large increases the truncation error, while a step that is too small amplifies floating-point round-off. Additionally, each input that is relevant to the optimization requires at least one additional function evaluation (two for a central difference scheme) to obtain the corresponding entry of the gradient vector; for functions with large input vectors, this can result in a massive computational overhead.
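Both costs of finite differences can be made concrete with a small sketch. It assumes a forward-difference scheme and uses the Rosenbrock function as a hypothetical stand-in objective; `fd_gradient` is an illustrative helper, not part of any particular optimization library. The gradient of a function with n inputs costs n + 1 evaluations, and the error of a single derivative estimate depends strongly on the chosen step width h.

```python
import math

def fd_gradient(f, x, h=1e-6):
    """Forward-difference gradient of f at x; also returns the number of f calls."""
    f0 = f(x)
    calls = 1
    grad = []
    for i in range(len(x)):
        xp = list(x)
        xp[i] += h                       # perturb one input at a time
        grad.append((f(xp) - f0) / h)
        calls += 1                       # one extra evaluation per input
    return grad, calls

def rosenbrock(x):
    """Hypothetical stand-in objective with a known analytic gradient."""
    return sum(100.0 * (x[i + 1] - x[i] ** 2) ** 2 + (1.0 - x[i]) ** 2
               for i in range(len(x) - 1))

# 10 inputs -> 11 evaluations of the objective for one gradient
grad, calls = fd_gradient(rosenbrock, [0.5] * 10)
print("function calls:", calls)

# Step-width sensitivity for d/dx exp(x) at x = 0 (exact value: 1):
# large h -> truncation error, tiny h -> round-off error
for h in (1e-1, 1e-7, 1e-15):
    approx = (math.exp(h) - math.exp(0.0)) / h
    print(f"h = {h:.0e}: error = {abs(approx - 1.0):.1e}")
```

For an objective whose single evaluation is expensive, the n + 1 scaling dominates quickly, which is the overhead referred to above.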

In such scenarios, a viable alternative is to use automatic differentiation (AD) to obtain derivative information of a function. The underlying idea of AD is to represent the function as a computational graph consisting of fundamental operations. The derivative of the function is then obtained by applying the chain rule, with the computational graph prescribing the composition; this requires that the Jacobian matrix of each node in the computational graph is known. Although automatic differentiation is not a new concept, it has received considerably more attention in recent years and has led to great successes, as it is now widely supported in software tools tailored to training feedforward neural networks (FNNs).
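The chain-rule composition over a graph of fundamental operations can be illustrated with forward-mode AD based on dual numbers. This is a minimal sketch under the assumption that only addition, multiplication, and `sin` occur; the class `Dual` and the helpers `sin` and `derivative` are hypothetical names chosen for illustration. Each operation propagates its own local derivative (its 1x1 Jacobian), and the sequence of operations executed while evaluating `f` plays the role of the computational graph.

```python
import math

class Dual:
    """A value together with its derivative w.r.t. one seeded input (forward mode)."""

    def __init__(self, val, dot=0.0):
        self.val = val   # primal value
        self.dot = dot   # tangent: derivative carried along with the value

    @staticmethod
    def lift(x):
        # Treat plain numbers as constants (derivative 0)
        return x if isinstance(x, Dual) else Dual(float(x))

    def __add__(self, other):
        other = Dual.lift(other)
        # sum rule: (u + v)' = u' + v'
        return Dual(self.val + other.val, self.dot + other.dot)

    __radd__ = __add__

    def __mul__(self, other):
        other = Dual.lift(other)
        # product rule: (u v)' = u' v + u v'
        return Dual(self.val * other.val,
                    self.dot * other.val + self.val * other.dot)

    __rmul__ = __mul__

def sin(x):
    x = Dual.lift(x)
    # chain rule: sin(u)' = cos(u) * u'
    return Dual(math.sin(x.val), math.cos(x.val) * x.dot)

def derivative(f, x):
    """Seed the input with tangent 1 and read the derivative off the output."""
    return f(Dual(x, 1.0)).dot

def f(x):
    return sin(x * x) + 3 * x   # analytic derivative: 2 x cos(x^2) + 3

print(derivative(f, 1.2))
```

Forward mode needs one pass per seeded input, so a full gradient of a function with n inputs still takes n passes; reverse mode instead obtains the whole gradient of a scalar output from a single backward sweep over the graph.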

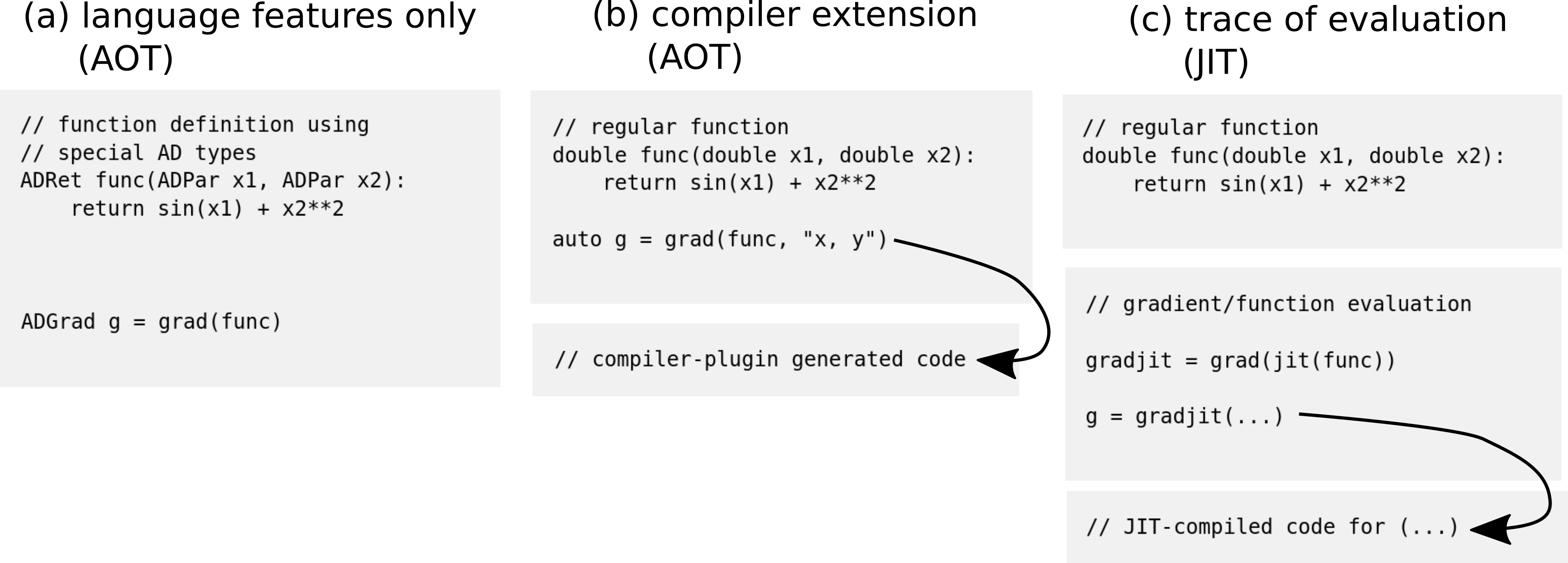

All automatic differentiation frameworks have in common that source code is generated (or combined) according to the computational graph detected for a function. Many other important aspects differ between frameworks. In compiled languages such as C/C++, there are implementations that rely solely on language features (see Fig. 1a) or on introspection provided by the compiler (see Fig. 1b) to generate (intermediate) source code and compile it ahead of time (AOT). This allows the compiler to apply the optimizations usually expected for these languages. For interpreted languages such as Python, performance-critical code typically relies on extensions with native backends. A prominent approach (see Fig. 1c) is to generate the computational graph only when the function is evaluated (which simplifies certain aspects of the AD implementation) and to perform just-in-time (JIT) compilation for a native backend.
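The idea of generating the computational graph only while the function is evaluated can be sketched with a small reverse-mode tape. This sketch covers only the recording and a reverse sweep; the JIT compilation for a native backend is not shown. The names `Var`, `exp`, and `backward` are hypothetical. Because each operation records its inputs and local partial derivatives at runtime, only the branches and loop iterations actually taken end up in the graph.

```python
import math

class Var:
    """Node of a computational graph recorded while the function is evaluated."""

    def __init__(self, val, parents=()):
        self.val = val
        self.parents = parents   # tuples (parent_node, local_partial_derivative)
        self.grad = 0.0          # adjoint, filled in by backward()

    @staticmethod
    def lift(x):
        return x if isinstance(x, Var) else Var(float(x))

    def __add__(self, other):
        other = Var.lift(other)
        return Var(self.val + other.val, ((self, 1.0), (other, 1.0)))

    __radd__ = __add__

    def __mul__(self, other):
        other = Var.lift(other)
        return Var(self.val * other.val, ((self, other.val), (other, self.val)))

    __rmul__ = __mul__

def exp(x):
    e = math.exp(x.val)
    return Var(e, ((x, e),))     # d/du exp(u) = exp(u)

def backward(out):
    """Reverse sweep: propagate adjoints from the output to all recorded inputs."""
    order, seen = [], set()
    def visit(node):             # depth-first post-order: parents before children
        if id(node) not in seen:
            seen.add(id(node))
            for parent, _ in node.parents:
                visit(parent)
            order.append(node)
    visit(out)
    out.grad = 1.0
    for node in reversed(order):  # children are processed before their parents
        for parent, local in node.parents:
            parent.grad += local * node.grad

# f(x0, x1, x2) = x0 * x1 + exp(x1 * x2): the graph is recorded during this call
x0, x1, x2 = Var(1.5), Var(0.5), Var(-2.0)
y = x0 * x1 + exp(x1 * x2)
backward(y)
print(x0.grad, x1.grad, x2.grad)
```

A single backward sweep yields all three partial derivatives, in contrast to the one-pass-per-input cost of finite differences or forward mode.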

We use automatic differentiation to construct domain-specific covariance functions for a Gaussian process regression (GPR) model whose training data also contains derivative information. The training data originates from density functional theory (DFT) calculations, and the GPR model is constructed dynamically during a nudged elastic band (NEB) calculation in order to replace a portion of the computationally expensive DFT calculations.

Fig. 1: Overview (pseudocode) of different implementation approaches for automatic differentiation.
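For the GPR setting described above, derivative observations enter the model through derivatives of the covariance function: for a GP with covariance k(x, x'), cov(f(x), f'(x')) = ∂k/∂x' and cov(f'(x), f'(x')) = ∂²k/∂x∂x'. The following sketch obtains these blocks with hyper-dual numbers, a forward-mode AD variant that carries a mixed second derivative. It assumes a one-dimensional squared exponential kernel with hypothetical hyperparameters `sigma` and `ell` as a stand-in; the domain-specific covariance functions used in this work, and the multi-dimensional atomic coordinates of an NEB calculation, are not reproduced here.

```python
import math

class HyperDual:
    """f + d1*e1 + d2*e2 + d12*e1*e2 with e1^2 = e2^2 = 0 (forward-mode AD)."""

    def __init__(self, f, d1=0.0, d2=0.0, d12=0.0):
        self.f, self.d1, self.d2, self.d12 = f, d1, d2, d12

    def __sub__(self, o):
        return HyperDual(self.f - o.f, self.d1 - o.d1, self.d2 - o.d2, self.d12 - o.d12)

    def __mul__(self, o):
        if not isinstance(o, HyperDual):
            o = HyperDual(float(o))      # constants carry no derivatives
        return HyperDual(self.f * o.f,
                         self.f * o.d1 + self.d1 * o.f,
                         self.f * o.d2 + self.d2 * o.f,
                         self.f * o.d12 + self.d1 * o.d2 + self.d2 * o.d1 + self.d12 * o.f)

    __rmul__ = __mul__

def hexp(a):
    e = math.exp(a.f)
    return HyperDual(e, e * a.d1, e * a.d2, e * (a.d12 + a.d1 * a.d2))

def se_kernel(x, xp, sigma=1.0, ell=0.7):
    """Hypothetical stand-in: k(x, x') = sigma^2 exp(-(x - x')^2 / (2 ell^2))."""
    r = x - xp
    return sigma ** 2 * hexp(r * r * (-0.5 / ell ** 2))

def covariance_blocks(x, xp, **hyper):
    # Seed x along e1 and x' along e2 to get dk/dx', and d2k/dx dx' in one pass
    k = se_kernel(HyperDual(x, 1.0, 0.0), HyperDual(xp, 0.0, 1.0), **hyper)
    return {"k": k.f, "dk_dxp": k.d2, "d2k_dx_dxp": k.d12}

print(covariance_blocks(0.3, -0.2))
```

The kernel is written only once, and all covariance blocks required for value and derivative observations follow from it automatically, which is the main appeal of AD when experimenting with new covariance functions.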